CJ's AI Toolbox

All the tools and skills you need for your AI Toolbox.

What Does Artificial Intelligence Really Mean?:

Intelligence in humans is not a real thing that boils down to any single measurable dimension. In practice, measures of intelligence or aptitude in a person merely test for proximity to privilege and alignment with the goals of those in power. In humans, a lack of "intelligence" is either pathologized or criminalized. A person who lacks privilege and/or alignment with the goals of those in power is considered deviant. Being classified as useless to those in power is typically done by labeling a person with either a crime or a sickness.

It's exactly the same with artificial intelligence. The "intelligence" of artificial intelligence is the ability of software to take actions that are in alignment with the goals of those in power. Sam Altman's OpenAI reportedly defines AGI, in its agreement with Microsoft, as AI that can return $100 billion in profits.

Terms like Artificial General Intelligence (AGI) are therefore essentially meaningless. The question of machine intelligence is merely the question of whether the machine can make decisions that align with the goals of those in power over it.

By the way, this was the topic of my graduate thesis if you want to read more: my graduate thesis on this topic.

When we talk about intelligent machines, we're talking about the ability of machines to make the correct decisions, as defined by those in power.

Yes, this counts:

    if(){
        if(){
            if(){
            }
        }
    }

Machine Learning (A Type of Artificial Intelligence):

Software that can learn how to make decisions.

Soviet cyberneticists helped change the world when they built some of the earliest software that could learn. The path from there to here is long with many forks and twists. Let's take a look at the major categories of modern artificial intelligence algorithms and examples of how each can become a tool in your toolbox.

- Supervised Learning: Tools that learn based on being told what to learn.

- Unsupervised Learning: Tools that learn without being told what to learn.

- Reinforcement Learning: Tools that learn based on rewards and punishments over time.

Supervised Learning:

Remember: supervised learning means tools that learn based on being told what to learn.

If you have separate examples of what you want the AI to learn how to do and what you want it to learn not to do, then supervised learning is probably the umbrella of techniques you're looking for.

For Simple Linear Relationships:

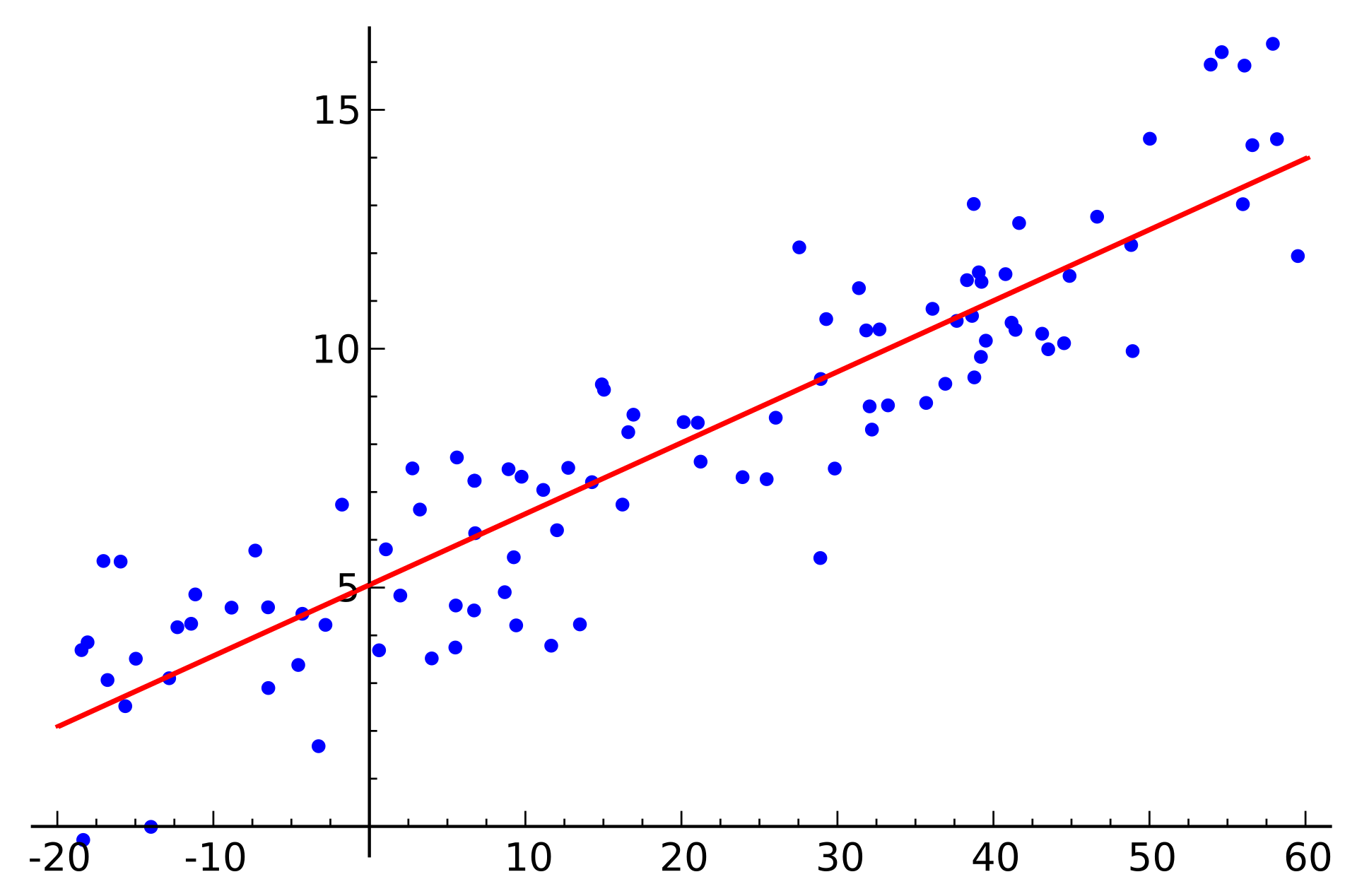

Linear Regression: This technique looks for a correlation and uses it to predict future data.
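
To make that concrete, here is a minimal sketch of fitting a straight line by ordinary least squares, using only the Python standard library. The function names and the practice-hours data are made up for illustration; in a real project you would more likely reach for a statistics library.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, divided by how much x varies, gives the slope.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Use the fitted line to predict y for a new x."""
    return slope * x + intercept


# Hypothetical data: hours of practice vs. test score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)
print(predict(slope, intercept, 6))  # 62.0
```

The "learning" here is just finding the slope and intercept that best match the past examples. Prediction is plugging a new number into that line.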

For Complex Linear Relationships

If you think you have most of the relevant data to be able to connect cause and effect, and there probably aren't a lot of significant unknowns:

ANOVA: this technique identifies the relationships between a lot of variables to find how they influence a single dependent variable.

Example: learn to predict covid mortality rate by state based on average wealth and voting trends.
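
As a rough sketch of what ANOVA computes, the simplest version (one-way ANOVA) compares how much group averages differ from each other against how much values vary inside each group. The function name and the three groups of numbers below are invented for illustration and are not real mortality data.

```python
def one_way_anova_f(groups):
    """Return the F statistic for a one-way ANOVA over a list of groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = sum(all_values) / len(all_values)
    k = len(groups)        # number of groups
    n = len(all_values)    # total number of observations

    # Variation explained by which group a value belongs to.
    ss_between = sum(
        len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups
    )
    # Variation left over inside each group.
    ss_within = sum(
        (v - sum(g) / len(g)) ** 2 for g in groups for v in g
    )

    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within


# Made-up outcome values for three hypothetical groups of states.
low_wealth = [5, 6, 7]
mid_wealth = [2, 3, 4]
high_wealth = [1, 2, 3]

f_stat = one_way_anova_f([low_wealth, mid_wealth, high_wealth])
print(f_stat)
```

A large F statistic suggests the grouping variable matters: the groups differ from each other by more than the noise inside each group would explain. Turning F into a p-value needs the F distribution, which is left out of this sketch.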

MANOVA: this technique identifies the relationships between a lot of variables to find how they influence a group of dependent variables.

Example: You're buying ads on Google, TikTok, and Facebook. You want to find out how much of the actual sales are coming from the money you're spending on each ad network.

MANCOVA: this technique identifies the relationships between a lot of variables to find how they influence a group of dependent variables, while controlling for the influence of other variables.

Example: For each state, look at the degree of racial segregation between the major racial groups plus the average amount of money someone makes, plus the rate of excess deaths, and learn to predict the amount of economic growth and the percent of people who make a living wage. Use average education level to isolate social determinants and control for education.

If you know there are many complex unknown factors and you want to predict a single value:

Example: If you want to predict changes in egg prices based on inflation, feed costs, cull rates, etc.

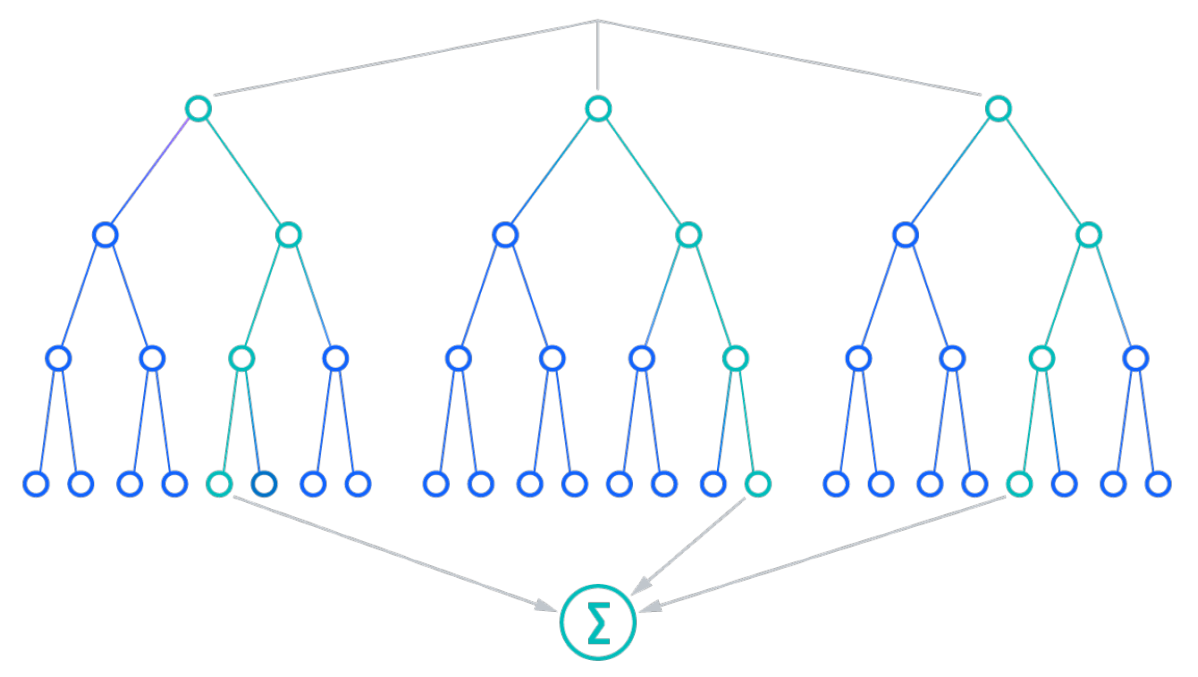

Random Forest Regression: Creates many small models of relationships in the input data. These models work together to predict future results.

For Tabular Data With Labels

Random Forest Decision Trees: If your problem can be put into a spreadsheet of examples where you want to predict a missing column in a new row, based on the relationships between the same columns in past data, this is the tool for you.

Predict Changes Over Time

Example: If you want to predict end of month change in retail egg prices based on daily changes in feed costs, inflation, wholesale egg prices, cull rates, etc.

ARIMA: This simple approach looks for patterns in the data related to change over time. It can be used to predict future data based on historical data.
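
A full ARIMA model has three parts (autoregression, differencing, and moving average). The sketch below shows only the autoregressive part in its simplest form, sometimes written AR(1), where each value is predicted from the one before it. The function names and the price series are hypothetical, and a real analysis would normally use a dedicated time-series library.

```python
def fit_ar1(series):
    """Least-squares fit of y[t] = const + phi * y[t-1]."""
    prev = series[:-1]   # yesterday's values
    curr = series[1:]    # today's values
    n = len(prev)
    mean_prev = sum(prev) / n
    mean_curr = sum(curr) / n
    cov = sum((p - mean_prev) * (c - mean_curr) for p, c in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    const = mean_curr - phi * mean_prev
    return const, phi


def forecast(series, steps, const, phi):
    """Roll the fitted rule forward to predict the next `steps` values."""
    predictions = []
    last = series[-1]
    for _ in range(steps):
        last = const + phi * last
        predictions.append(last)
    return predictions


# Made-up daily prices that settle toward a stable level.
prices = [10.0, 6.0, 4.0, 3.0, 2.5, 2.25]

const, phi = fit_ar1(prices)
print(forecast(prices, 3, const, phi))
```

The model learns one rule from history ("today is roughly a constant plus some fraction of yesterday") and applies that rule repeatedly to look ahead.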

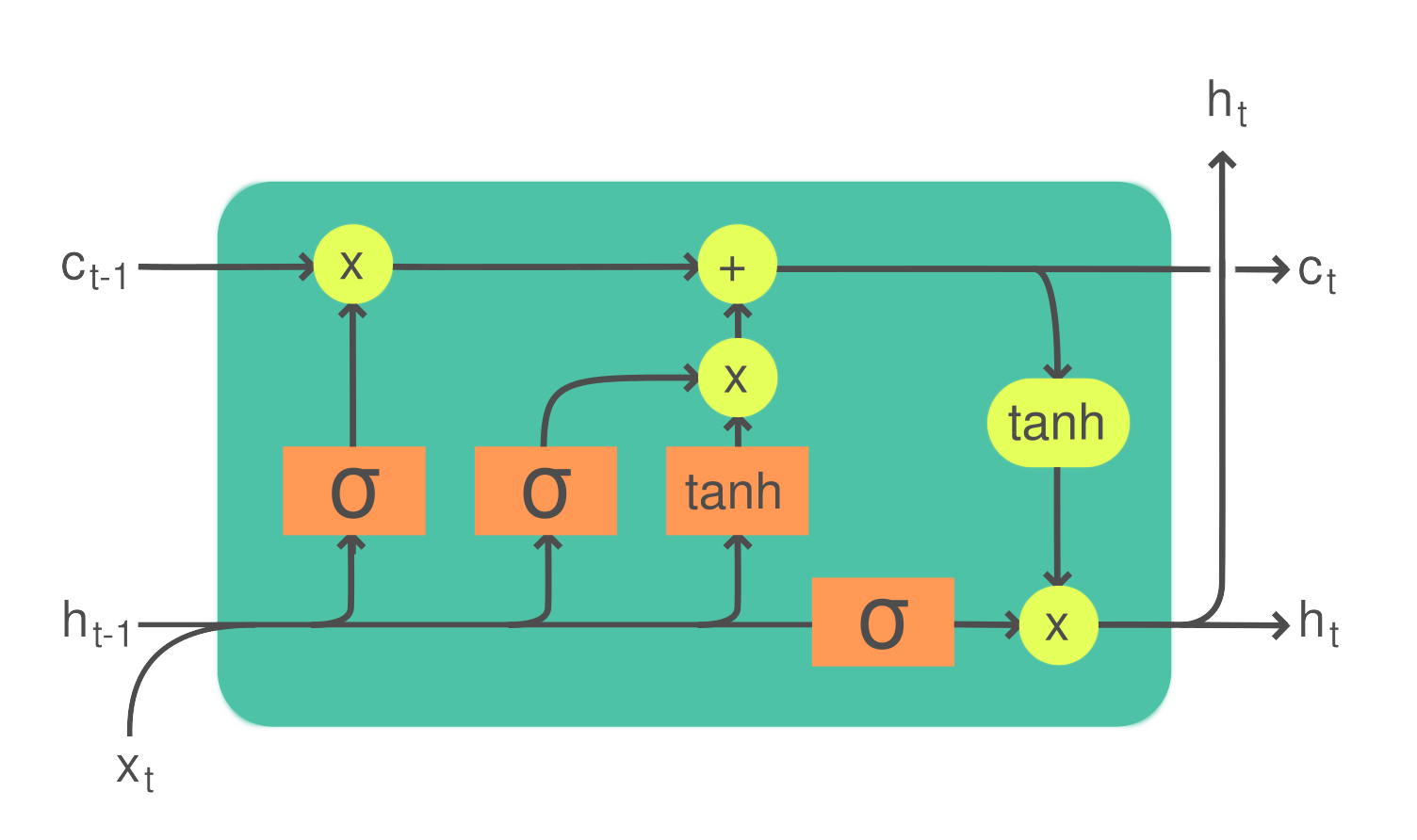

LSTM: This more complex approach looks for patterns in the data related to change over time. It can be used to predict future data based on historical data. This approach is more challenging, but sometimes it can give better results than ARIMA when there are many complex unknowns.

Identify Objects In Images/Videos

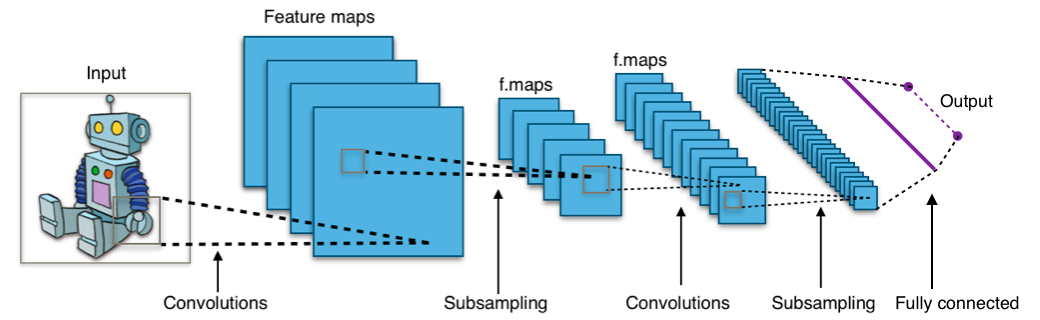

Convolutional Neural Nets (CNNs): Convolutional Neural Nets are the workhorse approach to machine vision, although newer architectures such as vision transformers are also used.
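
The "convolution" in the name is a small grid of numbers (a kernel) that slides across the image and responds to a particular pattern. Here is a minimal sketch in plain Python. The kernel is hand-picked to respond to vertical edges, whereas a real CNN learns its kernel values during training. The function name and the tiny image are made up for illustration.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            row.append(total)
        output.append(row)
    return output


# A tiny 4x4 "image": dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked kernel that fires where brightness jumps from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output lights up only in the middle column, exactly where the dark-to-bright edge sits. A CNN stacks many such learned kernels to go from edges to shapes to whole objects.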

Check out this hilarious exchange between a child on Twitter and one of the world's foremost experts on artificial intelligence, Yann LeCun:

Unsupervised Learning:

Remember: unsupervised learning means software that learns without being told what to learn.

If you have examples that you want the AI to understand in order to make more general predictions without specific kinds of answers being right or wrong, this is what you're looking for.

Generative: Create New Things By Understanding Examples

Example: You want to download the entire internet and use it to build a model that can generate art or act as a chatbot you can rent out to the world until someone makes a free alternative that works better.

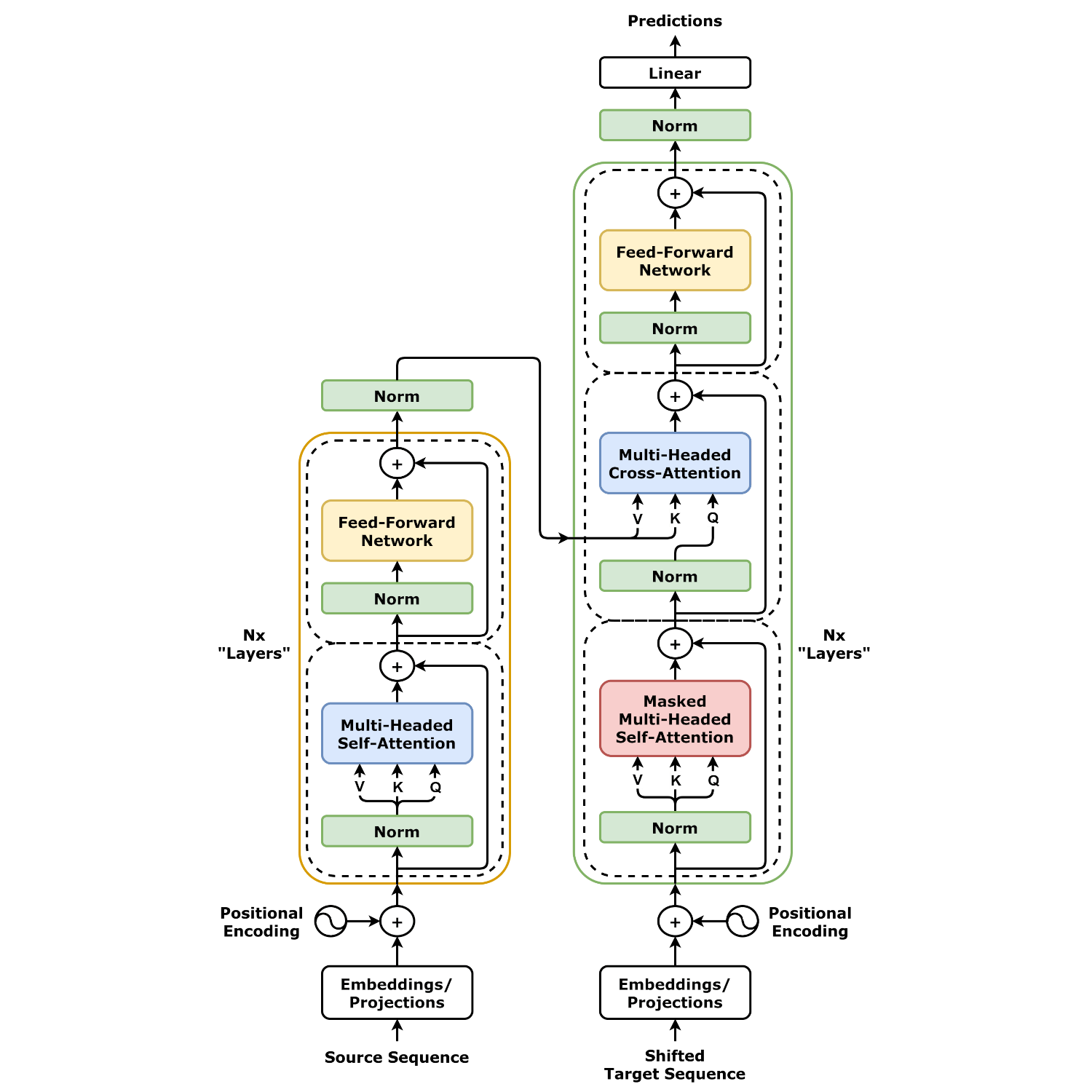

Large Language Models (LLMs): Large language models learn from large amounts of text how to predict what word should come next. They also have some limited ability to internally represent complex ideas in order to do next-word prediction.

This is very helpful for doing your homework or tutoring people on a complex topic, but no one has yet found a way to make money with transformer models. There are some theories about how tools built on top of LLMs such as agentic systems could potentially lead to eventual profitability.

Since my days as a product tester working on ChatGPT before it was publicly available, I have felt LLMs are more likely to simply become a generic open-source tool which improves impact for people who understand how to use them, but that they will not constitute a profitable business unto themselves.
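
To get a feel for the next-word prediction idea behind LLMs, here is a deliberately tiny sketch that just counts which word follows which. This is a simple bigram counter and not how a real LLM works internally (those use large neural networks over tokens), but the training signal is the same: given the text so far, guess what comes next. The function names and the sample sentence are made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most common follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)

print(predict_next(model, "the"))      # cat
print(predict_next(model, "unicorn"))  # None
```

Note that nobody labeled the data. The text itself supplies the "right answer" for every position, which is why this kind of training can scale to enormous amounts of raw text.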

Diffusion Models: Diffusion models create images and video from a prompt based on looking at many examples and their text descriptions. They can also modify or edit existing photos and videos.

Discriminative: Separating Datasets Into Clusters Or Groups Of Similar Data

Understanding Clusters

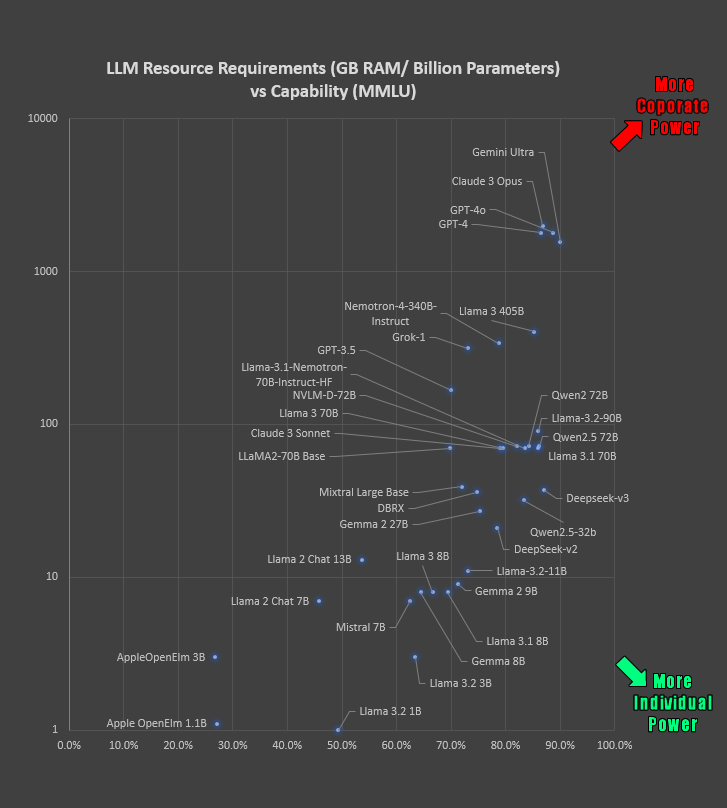

Example: There are a lot of large language models of radically different size and capabilities. How are they diverging into types and converging towards specific use cases?

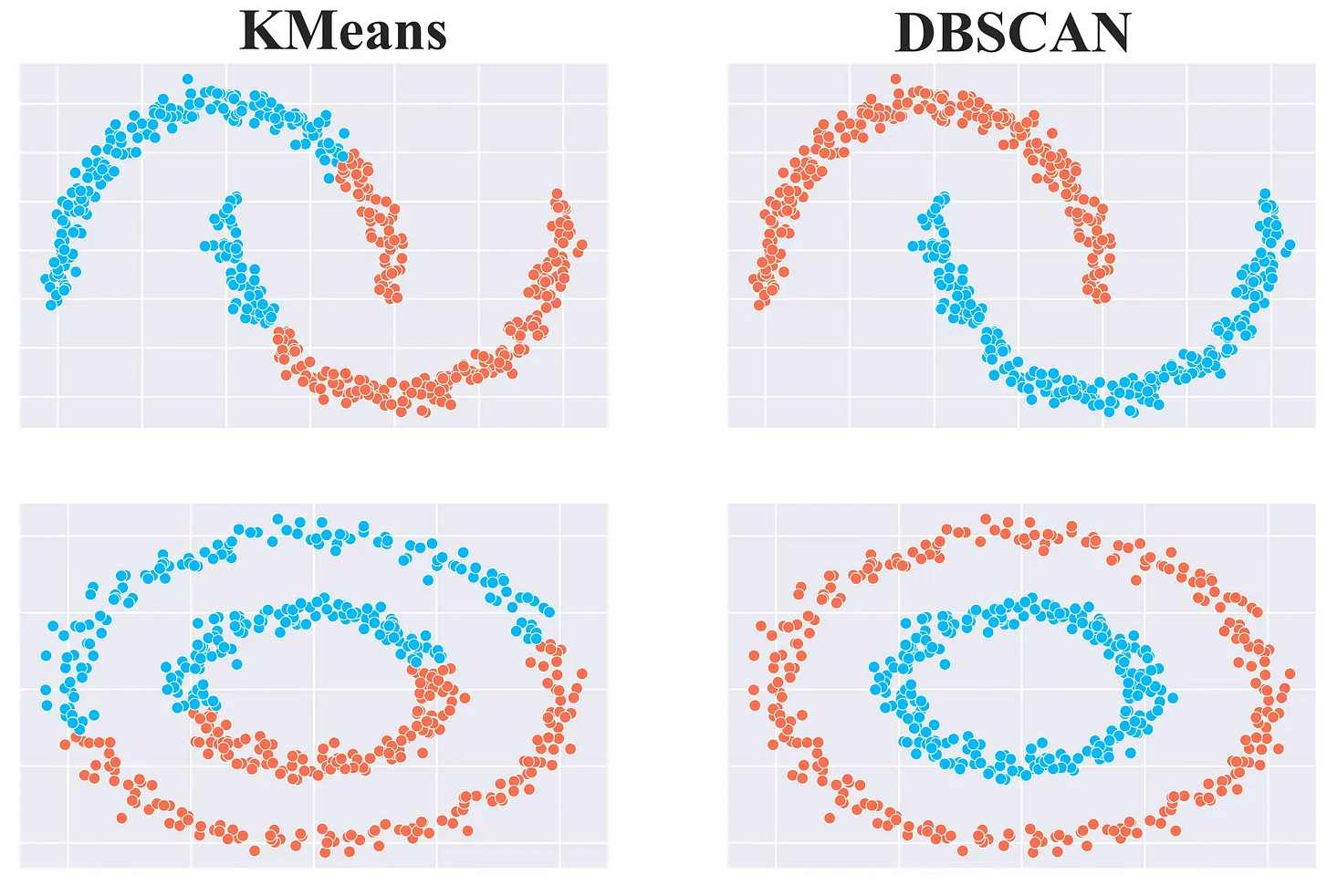

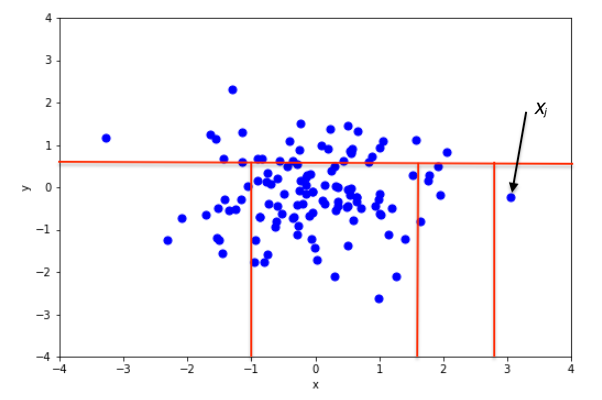

K-Means Clustering: This technique learns how to identify boundaries between groups in a dataset and separate the data into groups of related items based on distance from center points.
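
The K-Means loop is short enough to sketch in plain Python: assign every point to its nearest center, move each center to the average of its points, and repeat. For the sake of a predictable example this sketch starts from the first k points as centers, which is an assumption of mine. Real implementations usually pick starting centers randomly or with smarter schemes, because a bad start can leave K-Means stuck with poor clusters. The data is made up.

```python
def kmeans(points, k, iterations=10):
    """Cluster 2D points into k groups. Returns (centers, clusters)."""
    # Assumption for this sketch: use the first k points as starting centers.
    centers = list(points[:k])
    clusters = []
    for _ in range(iterations):
        # Step 1: assign each point to its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            distances = [
                (p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2 for c in centers
            ]
            clusters[distances.index(min(distances))].append(p)
        # Step 2: move each center to the average of its assigned points.
        for i, cluster in enumerate(clusters):
            if cluster:
                centers[i] = (
                    sum(p[0] for p in cluster) / len(cluster),
                    sum(p[1] for p in cluster) / len(cluster),
                )
    return centers, clusters


# Two made-up, well-separated blobs of points.
points = [
    (1, 1), (1, 2), (2, 1), (2, 2),
    (8, 8), (8, 9), (9, 8), (9, 9),
]

centers, clusters = kmeans(points, k=2)
print(sorted(centers))
```

Notice that you have to tell K-Means how many groups to look for (k). Choosing k is part of the craft.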

DBSCAN: This technique is similar to K-Means clustering, except that instead of looking at the distance from a central point, it looks at the density of points as a cluster spreads out. As a result, it identifies irregular shapes better than K-Means clustering, which looks for roughly circular shapes.

Neither approach is better or worse. Each is better in different kinds of situations.
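
For comparison, here is a compact sketch of the DBSCAN idea in plain Python. A point with enough close neighbors starts a cluster, the cluster keeps spreading through neighbors that are themselves dense, and anything left stranded is marked as noise. The parameter names `eps` (how close counts as a neighbor) and `min_pts` (how many neighbors count as dense, including the point itself) follow common usage. The data is made up.

```python
def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""

    def neighbors(i):
        xi, yi = points[i]
        return [
            j for j, (xj, yj) in enumerate(points)
            if (xi - xj) ** 2 + (yi - yj) ** 2 <= eps ** 2
        ]

    NOISE = -1
    labels = [None] * len(points)  # None means "not visited yet"
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may be claimed later as a border point
            continue
        cluster_id += 1
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point joins the cluster
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # dense point: keep spreading
    return labels


# A long thin line, a small clump, and one stray point (all made up).
points = [
    (0, 0), (1, 0), (2, 0), (3, 0), (4, 0),
    (10, 10), (10, 11), (10, 12),
    (50, 50),
]

print(dbscan(points, eps=1.5, min_pts=2))
# [0, 0, 0, 0, 0, 1, 1, 1, -1]
```

Unlike K-Means, DBSCAN was never told how many clusters to find, it followed the long thin line without trouble, and it flagged the stray point as noise instead of forcing it into a group.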

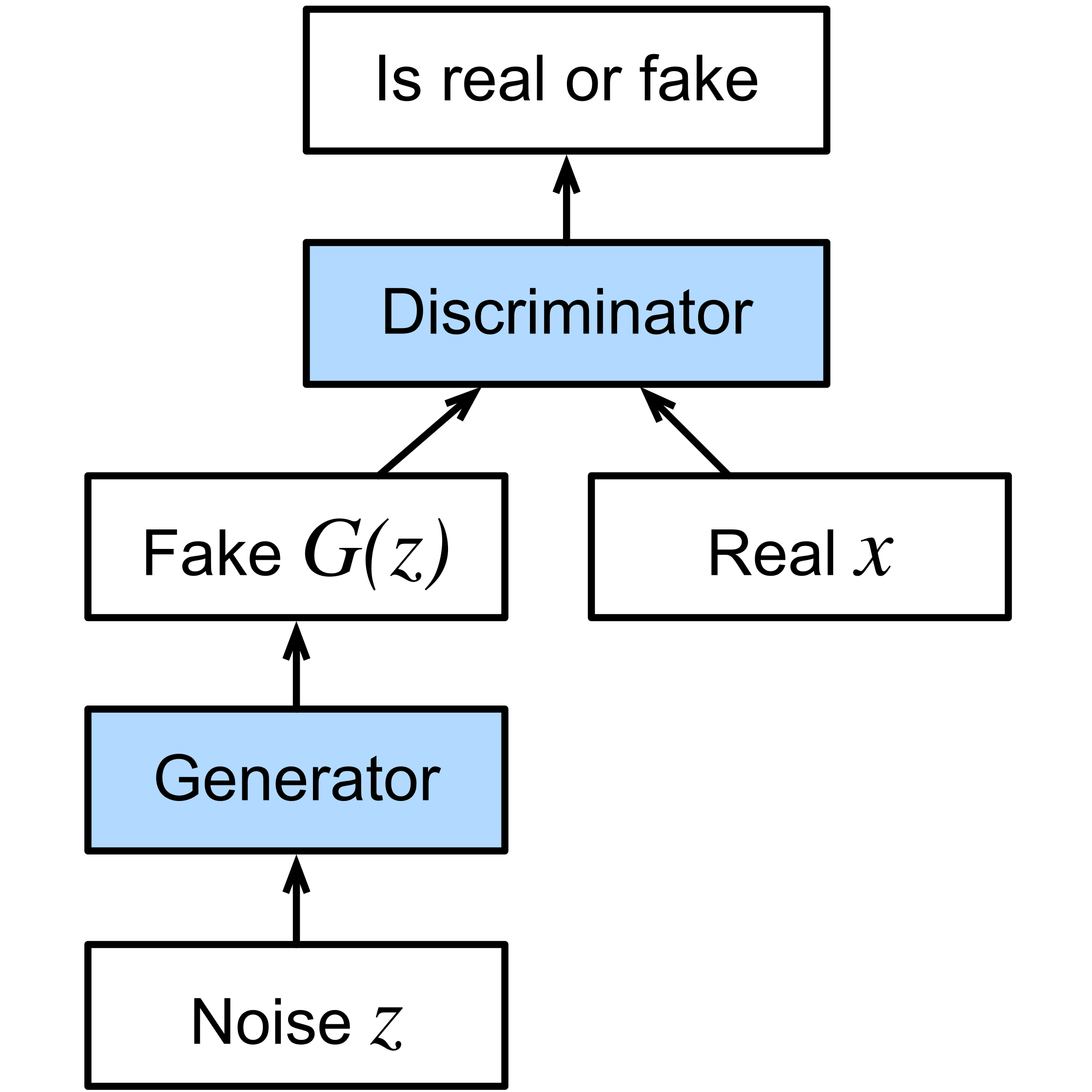

Generative Adversarial Networks (GANs)

A single AI with both a generative and a discriminative side: a generator produces fake examples and a discriminator learns to tell them apart from real ones, so the two internal models are forced to compete. This is an extremely effective strategy for generating realistic new data.

Example: GANs are extremely effective at generating photorealistic images, such as faces of people who do not exist.

Example: Learning to play games or control robots through competition and rewards is usually done with reinforcement learning and self-play (see Reinforcement Learning below) rather than with GANs, although the adversarial idea is related.

Isolation Forest: This technique finds outliers in complex data. This is enormously valuable for anomaly detection, for example to identify credit card fraud. Isolation Forests have applications in essentially every field and business.

Reinforcement Learning:

Software that continuously learns based on rewards and punishments over time.

All the models up to this point in the list are trained and then set in stone as it were. They do not continue to learn once training is over except by repeating the training process to create a new version of the model. Reinforcement learning is an approach where the algorithm can continuously learn as it goes along and update its model in real time.
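
One of the simplest illustrations of learning from rewards as you go is the "multi-armed bandit": a row of slot machines with unknown payout rates, where the software has to figure out which one is best while it plays. The sketch below uses an epsilon-greedy strategy (mostly play the best-known machine, occasionally try a random one). The function name, payout rates, and parameter values are all made up for illustration.

```python
import random


def run_bandit(true_win_rates, steps=5000, epsilon=0.1, seed=0):
    """Learn which arm pays best by trial, reward, and update."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_win_rates)  # current belief per arm
    pulls = [0] * len(true_win_rates)        # how often each arm was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random arm.
            arm = rng.randrange(len(true_win_rates))
        else:
            # Exploit: play the arm that currently looks best.
            arm = estimates.index(max(estimates))
        reward = 1.0 if rng.random() < true_win_rates[arm] else 0.0
        pulls[arm] += 1
        # Update the running average for this arm right away.
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]
    return estimates, pulls


# Hypothetical slot machines; the learner never sees these numbers directly.
estimates, pulls = run_bandit([0.2, 0.5, 0.8])
print(estimates)
print(pulls)
```

There is no separate training phase here. Every single play updates the model, which is the key difference from the train-once models earlier in the list.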

Playing The Odds

Use this when you know the odds of many things that are related to some set of outcomes, and you want to find the odds of one specific combination of outcomes.

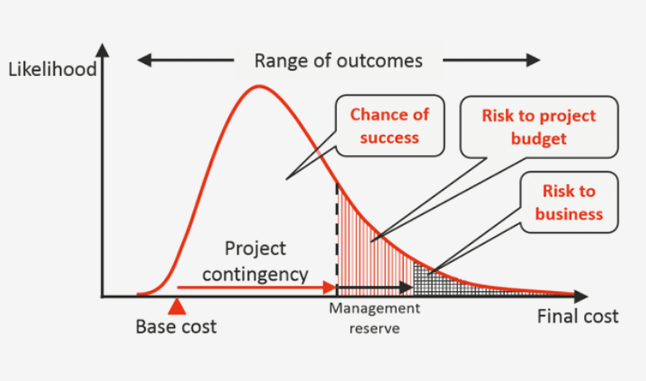

Think of Monte Carlo as brute forcing with random sampling: it draws from sets of statistics over and over to simulate a large number of possible outcomes, then counts how often each outcome occurs to judge which one is the most likely.

Nate Silver's 538 is probably the most famous example of a Monte Carlo model.

538 combines the results of many election polls, plus their sampling confidence data, plus 538's opinion about the reputation of the pollster to produce the dataset.

This dataset is put through a Monte Carlo simulation in order to predict the outcomes of elections.

Monte Carlo, like all statistical models, is a model of the data, not a model of the world. As with all models, the accuracy of the predictions it makes depends on the accuracy of the sample data being fed into the model.
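
Here is a minimal sketch of the Monte Carlo idea applied to a toy election. Each state has a win probability and a number of votes, and the code simulates thousands of elections to see how often the candidate gets a majority. The states, probabilities, and function name are invented, and the sketch assumes each state is decided independently, which is a simplification.

```python
import random


def simulate_election(states, trials=20000, seed=0):
    """Estimate the chance of winning a majority of votes.

    `states` is a list of (win_probability, votes) pairs.
    """
    rng = random.Random(seed)
    total_votes = sum(votes for _, votes in states)
    needed = total_votes // 2 + 1
    wins = 0
    for _ in range(trials):
        # Roll the dice once per state and add up the votes won.
        won = sum(votes for p, votes in states if rng.random() < p)
        if won >= needed:
            wins += 1
    return wins / trials


# Three hypothetical states: one safe, one toss-up, one long shot.
states = [(0.9, 10), (0.5, 10), (0.2, 10)]

win_chance = simulate_election(states)
print(win_chance)
```

For this toy case the exact answer can be worked out by hand (the candidate needs at least two of the three states, which comes to 0.55), so the simulated number should land close to that. The value of Monte Carlo shows up when there are too many combinations to work out by hand.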