CJ Trowbridge

32GB DDR5 Ollama Server Based on Orange Pi 6+

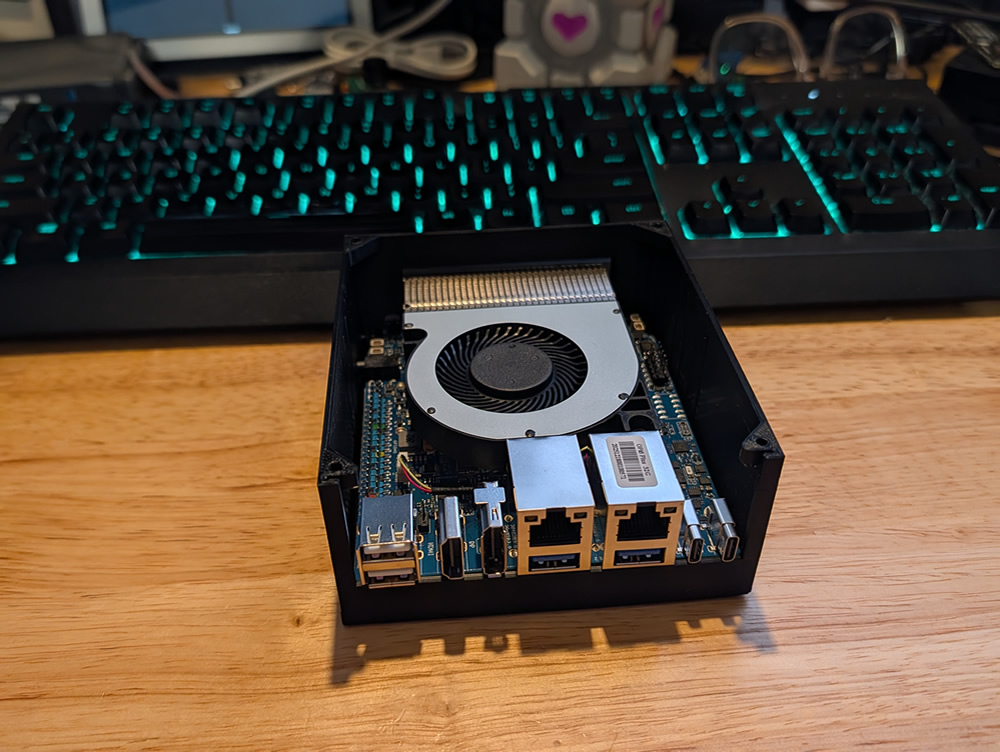

I built a compact local LLM server based on an Orange Pi 6+ with 32GB DDR5, running Ollama for offline inference and tooling.

Goals:

- Small and power-efficient

- Quiet enough to live on a shelf

- Simple deploy + updates

- Optional NVMe storage

Parts List

- Orange Pi 6+ (32GB DDR5)

- 3D Printed Case

- Evo SD Card: fast and reliable for the OS. You can also use an NVMe drive for storage, but for LLM inference disk speed is much less of a bottleneck than RAM. The disk mainly affects how long a model takes to load; after that, the model is served from memory. So I went with a large SD card for simplicity.
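The RAM point is easy to check with back-of-the-envelope arithmetic: a model's weights take roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule of thumb to the board's 32GB. The bits-per-weight values (around 4.5 for a typical 4-bit quantization, 16 for unquantized FP16) and the 20% headroom for the KV cache, runtime, and OS are assumptions rather than measurements, so treat the output as a rough guide.

```python
def estimate_model_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    # bytes = params * bits / 8; with params in billions, the result is already in GB
    return params_billion * bits_per_weight / 8


def fits_in_ram(params_billion: float, bits_per_weight: float,
                ram_gb: float = 32.0, overhead: float = 0.2) -> bool:
    """Assumed rule of thumb: weights plus ~20% headroom must fit in RAM."""
    needed_gb = estimate_model_gb(params_billion, bits_per_weight) * (1 + overhead)
    return needed_gb <= ram_gb


if __name__ == "__main__":
    # (parameter count in billions, assumed bits per weight)
    for params, bits in [(8, 4.5), (32, 4.5), (70, 4.5), (8, 16)]:
        size = estimate_model_gb(params, bits)
        print(f"{params}B @ {bits}-bit: ~{size:.1f} GB, fits in 32GB: {fits_in_ram(params, bits)}")
```

Under these assumptions, 8B and 32B models at 4-bit fit comfortably, while a 70B model needs about 39GB for its weights alone and does not.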

Process

- Assemble Hardware: Install the Orange Pi 6+ into the 3D printed case, ensuring proper cooling and access to ports. I am leaving the top off because it looks cooler. 😎

- Install Ubuntu Server for Arm: available here

- Set Up Docker and Ollama: I put together this simple script, which does all the work for you.

- Connect Client: Here are some of the most popular clients for connecting to your Ollama server:

  - Open WebUI: a web interface much like ChatGPT, but self-hosted and customizable.

  - LM Studio: a desktop app for managing and chatting with your local LLMs. You can connect it to your Ollama server or use it locally on your machine.

  - Ollama CLI

  - Ollama Python SDK

  - Ollama Web UI
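Ollama-based clients such as Open WebUI, the Ollama CLI, and the Python SDK talk to the server over its HTTP API, so a quick way to confirm the setup worked is to call that API directly. Below is a minimal sketch using only Python's standard library: `list_models` queries `GET /api/tags`, and `generate` sends a non-streaming request to `POST /api/generate` on Ollama's default port, 11434. The hostname `orangepi.local` is a hypothetical placeholder for your server's address, and the sketch assumes that port is reachable from your client machine.

```python
import json
import urllib.request

# Hypothetical address of the Orange Pi; replace with your server's hostname or IP.
# 11434 is Ollama's default API port.
OLLAMA_URL = "http://orangepi.local:11434"


def build_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def list_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Return the names of the models already pulled on the server (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        data = json.load(resp)
    return [m["name"] for m in data.get("models", [])]


def generate(prompt: str, model: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt and return the complete reply text."""
    req = build_generate_request(base_url, model, prompt)
    # Generation on a small ARM board can be slow, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        # With "stream": False, Ollama returns a single JSON object whose
        # "response" field holds the generated text.
        return json.load(resp)["response"]
```

For example, `generate("Why is the sky blue?", model="llama3.2")` returns the full reply once generation finishes; `llama3.2` is only an example name, so substitute a model you have pulled (check with `list_models()`). Disabling streaming trades responsiveness for simpler code. The Ollama Python SDK listed above wraps the same endpoints if you would rather not handle raw HTTP.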